

Policy Brief: Ensuring Safe and Ethical Integration of Artificial Intelligence in the Workplace

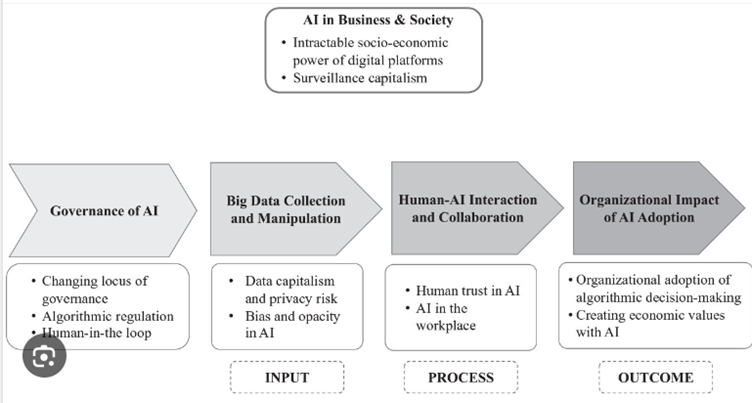

The rapid evolution of Artificial Intelligence (AI) in the workplace presents unprecedented opportunities and challenges. This policy brief explains why AI integration matters and sets out a comprehensive framework for its safe and ethical use within the institution. By adhering to this policy, the institution can balance technological advancement with its core values.

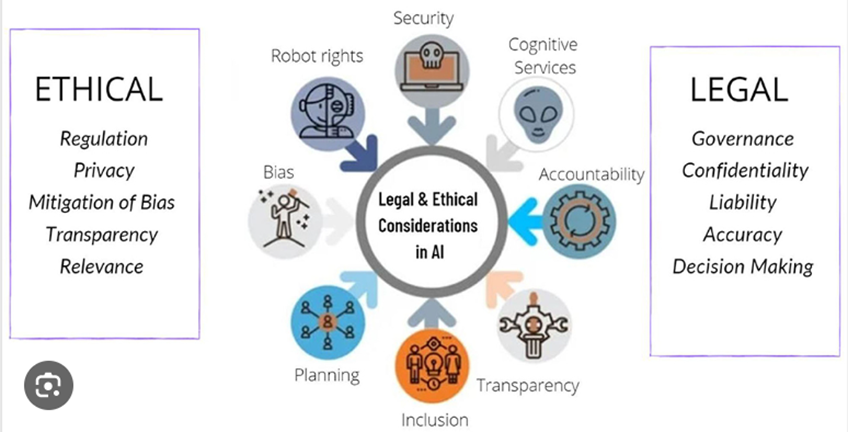

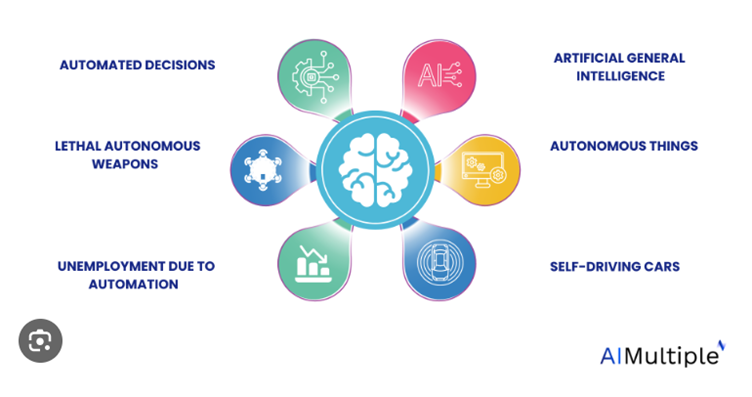

The integration of AI tools is not merely a technological advancement but a pivotal shift in workplace dynamics (Benbya et al., 2020). It carries the potential to redefine operational efficiency, but without guidelines, it can lead to unintentional biases, data breaches, and ethical dilemmas (Leslie, 2019). This policy is an urgent response to ensure the rights and safety of staff, students, and the broader community [See Appendix 1].

• Purpose and Context: AI has become deeply integrated into many occupational domains, necessitating stringent guidelines for its responsible use.

• Definitions: Establishes a shared understanding through clear definitions of key terms, including AI, Machine Learning, and AI Ethics.

• Why this Policy is Needed: Without clear guidelines, misuse or misunderstanding of AI can lead to ethical and safety concerns (Feijóo et al., 2020). This policy acts as a proactive measure against potential issues.

The need for this policy was identified through an extensive review of academic literature, industry best practices, and institutional feedback over the past two years. A dedicated team of AI ethicists, data scientists, and organizational behaviour experts collaborated to gather and analyse data using multiple methods, including surveys within the institution, expert interviews, and case studies of similar institutions [See Appendix 2].

The analysis revealed three major findings:

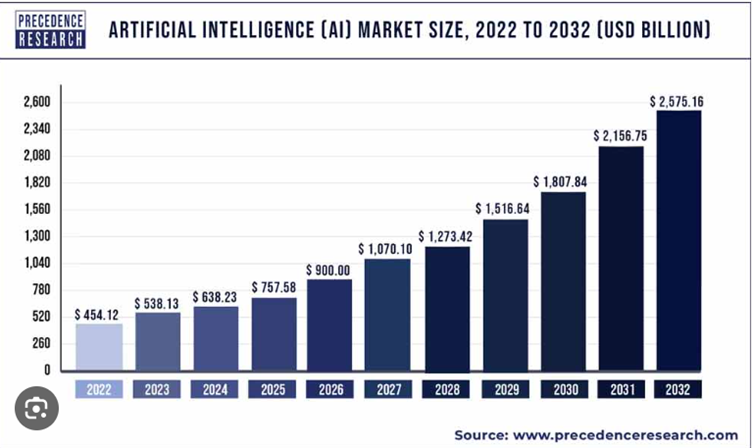

• Rising Integration of AI: The adoption of AI tools has surged across departments within institutions, especially in administrative, academic, and research domains (Dwivedi et al., 2021) [Appendix 3].

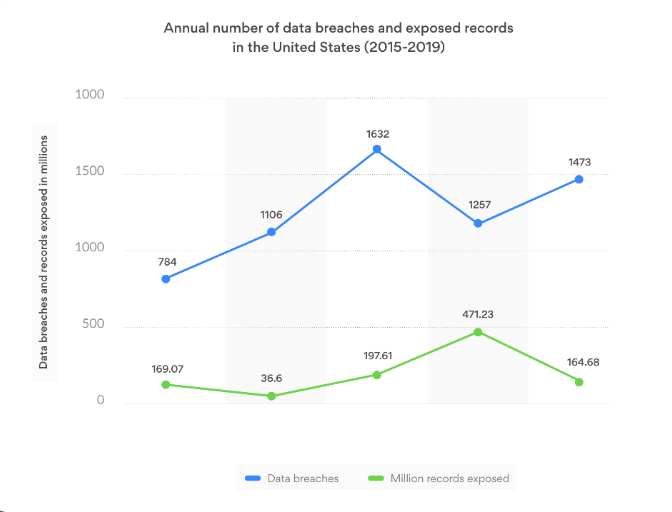

• Potential Risks: Without a comprehensive policy, there are increased instances of data breaches, unintentional biases in decision-making, and ethical concerns related to AI applications [Appendix 4].

• Need for Training and Collaboration: Institutions that had established AI guidelines, especially those focusing on employee training and vendor collaboration, experienced smoother integration with fewer ethical dilemmas.

The institution is committed to leveraging AI responsibly, with an emphasis on human dignity, safety, and ethical considerations.

Procedures

• AI Safety Protocols: Guidelines for deployment, training, reporting, and assessments (Liu et al., 2020).

• Collaboration with AI Vendors: Criteria for vendor selection, regular audits, and feedback mechanisms (Shneiderman, 2020).

• Ethical Use of AI: Ensuring fairness, transparency, and robust data protection in AI operations (Larsson, 2020).

Artificial Intelligence, with its transformative potential, can reshape institutional operations. However, integrating AI seamlessly requires a comprehensive policy framework. Once implemented, this policy will guide the institution in navigating the AI landscape with confidence, ensuring that technology aligns with institutional values and objectives.

The integration of AI without a guiding policy can lead to unforeseen challenges and ethical dilemmas. By adhering to this policy:

• Institutions can prioritize comprehensive training for all staff who work with AI.

• Institutions can establish criteria for collaborating with AI vendors to ensure ethical standards are met.

• Institutions should conduct regular reviews of AI safety and ethical guidelines so that they keep pace with technological advancements.

The institution recognizes the transformative potential of Artificial Intelligence (AI) in enhancing many aspects of its operations. As AI tools and systems become integral to operations, the primary focus is on the safety, well-being, and rights of staff, students, and the extended community (Tamers et al., 2020).

This policy dictates:

• Ethical development and deployment of AI tools so that they operate without bias, upholding the principles of fairness and non-discrimination (Loza de Siles, 2021).

• Unwavering transparency in AI-driven processes, ensuring all stakeholders understand the decision-making mechanisms.

• Stringent measures to maintain privacy and protect the data of every individual interacting with or impacted by AI systems (Mantelero and Esposito, 2021).

This policy underscores the institution's commitment to leveraging AI responsibly. Its vision is a future in which AI not only augments institutional capacities but also aligns with core values. As the AI landscape evolves, this policy will guide decisions so that they prioritize human dignity, safety, and ethical considerations. The institution remains committed to continuous adaptation and leadership in the AI domain, pursuing responsible integration while upholding its foundational principles.

For the purposes of this policy, the following terms are defined:

• Artificial Intelligence (AI): A multidisciplinary field of computer science concerned with simulating human intelligence processes in machines, particularly computer systems (Alkatheiri, 2022). These processes include learning, reasoning, self-correction, and decision-making.

• Machine Learning (ML): A subset of AI that gives systems the ability to learn automatically from data without being explicitly programmed (Esposito and Esposito, 2020). It focuses on developing algorithms whose behaviour changes as they are exposed to new data.

• AI-driven workplace tools/systems: Software or hardware integrated into the workplace that utilizes AI to perform tasks, enhance productivity, or make decisions.

• AI Safety: The study and practice of ensuring that AI systems operate in ways that are beneficial to humanity and do not inadvertently harm users or society (Critch and Krueger, 2020).

• AI Ethics: The branch of ethics concerned with setting standards for the moral and responsible development and deployment of AI, ensuring fairness, transparency, and accountability (Kazim and Koshiyama, 2021).

These definitions are integral to understanding the scope and implications of the policies and procedures outlined in subsequent sections.

The following roles and responsibilities apply under this policy:

• Officers: Oversee policy implementation and ensure adherence at all organizational levels.

• Workers: Adhere to the policy guidelines, report discrepancies, and attend necessary training sessions.

• PCBUs (persons conducting a business or undertaking): Ensure that the work environment and practices align with the policy and that potential hazards are addressed.

• Contractors: Comply with the policy while performing tasks, ensuring the safety of themselves and others.

• Executives: Allocate resources for policy enforcement and ensure timely updates as needed.

• Committees: Regularly review the policy, recommend updates, and ensure organization-wide awareness.

This policy complies with the Work Health and Safety Act 2011 (WHS Act), ensuring safe work practices and environments. In addition, the NSW Work Health and Safety Regulation 2017 provides detailed guidance on health and safety measures. The organization also draws on relevant standards and codes of practice to further strengthen its commitment to workplace health and safety.

In the evolving landscape of AI integration, establishing best practices is paramount. This guidance addresses the balanced incorporation of AI in workplaces, emphasizing gradual introduction, continuous training, and diligent research on AI safety and ethics. The procedures cover AI safety protocols, vendor collaboration, and stringent ethical standards, with the aim of holistic AI implementation [See Appendix 7].

To ensure a harmonious integration of AI into the workplace, the following best practices are recommended:

• AI-driven Workplaces: Adopt a phased approach, introducing AI tools gradually. This allows employees to adapt without feeling overwhelmed and ensures system compatibility (Tumai, 2021).

• Employee Training on AI: Conduct regular, in-depth training sessions. These should cover not only the functional aspects of AI tools but also their ethical implications, ensuring a well-rounded understanding (Said, 2023).

• Collaborative Research on AI Safety and Ethics: Partner with academic institutions, industry experts, and AI vendors. Pooling resources and insights can lead to more comprehensive research outcomes and solutions (Dwivedi et al., 2021).

Several institutions have successfully integrated AI by prioritizing both functionality and ethics. For instance, XYZ University adopted AI-driven administrative tools while establishing a robust oversight committee to ensure ethical usage. Its success story serves as a valuable blueprint.

Before deploying AI tools, a checklist should be consulted. This includes verifying the tool's source, understanding its decision-making process, ensuring data privacy measures are in place, and confirming the availability of post-deployment support. Regularly updating this checklist ensures it remains relevant in the fast-evolving AI landscape.
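The checklist described above can be captured as a simple record, so that a tool is cleared for deployment only when every item has been confirmed. The following is a minimal Python sketch under stated assumptions: the item names, the `DeploymentReview` class, and the example tool name are hypothetical illustrations, not part of any prescribed system.

```python
from dataclasses import dataclass, field

# The four items named in the checklist; these field names are hypothetical labels.
CHECKLIST_ITEMS = (
    "source_verified",
    "decision_process_understood",
    "privacy_measures_in_place",
    "post_deployment_support_confirmed",
)


@dataclass
class DeploymentReview:
    """Pre-deployment checklist answers for one AI tool (hypothetical structure)."""

    tool_name: str
    answers: dict = field(default_factory=dict)

    def missing_items(self) -> list:
        # An item is satisfied only if it has been explicitly marked True.
        return [item for item in CHECKLIST_ITEMS if self.answers.get(item) is not True]

    def ready_to_deploy(self) -> bool:
        # Deployment is cleared only when no checklist item is outstanding.
        return not self.missing_items()


review = DeploymentReview(
    tool_name="example-scheduling-assistant",  # hypothetical tool
    answers={
        "source_verified": True,
        "decision_process_understood": True,
        "privacy_measures_in_place": True,
        "post_deployment_support_confirmed": False,
    },
)
print(review.ready_to_deploy())  # False
print(review.missing_items())    # ['post_deployment_support_confirmed']
```

Keeping the items in a single tuple makes it straightforward to add entries when the checklist is updated.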

The following procedures are established to ensure the safety of AI tools and systems within the workplace:

a. Guidelines for Safe Deployment of AI Tools and Systems:

• Prior to integration, all AI tools and systems must undergo a rigorous safety assessment to determine potential risks and ensure compatibility with existing infrastructure (Falco et al., 2021).

• Developers and vendors should provide documentation detailing the operational requirements, potential hazards, and safety features of the AI tool or system.

• Any AI system with decision-making capabilities must have a manual override function, allowing human intervention in critical situations (Almada, 2019).

• Periodic updates and patches must be installed promptly to rectify any vulnerabilities and enhance system performance.
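The manual-override requirement can be illustrated as a rule for deciding which output takes effect. In this minimal sketch, `final_decision` and `Decision` are hypothetical names, and holding critical cases for human review by default is an assumption added for illustration; the guideline above only requires that a human be able to intervene.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    outcome: str
    decided_by: str  # "ai", "human", or "none" while awaiting review


def final_decision(ai_outcome: str, is_critical: bool,
                   human_override: Optional[str] = None) -> Decision:
    """Return the decision that takes effect, giving a human the final say."""
    if human_override is not None:
        # A human override always replaces the AI output.
        return Decision(outcome=human_override, decided_by="human")
    if is_critical:
        # Assumption: critical cases wait for a person instead of executing automatically.
        return Decision(outcome="pending_human_review", decided_by="none")
    # Non-critical cases without an override use the AI output.
    return Decision(outcome=ai_outcome, decided_by="ai")


print(final_decision("approve", is_critical=False))
# Decision(outcome='approve', decided_by='ai')
print(final_decision("approve", is_critical=True, human_override="reject"))
# Decision(outcome='reject', decided_by='human')
```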

b. Training and Awareness Programs for Employees:

• All staff interacting with or affected by AI tools must undergo comprehensive training on their operation, safety protocols, and potential risks (Martinetti et al., 2021).

• Training sessions should be conducted periodically to address updates or changes in AI functionality.

• Awareness campaigns should be initiated to familiarize employees with the ethical considerations and societal implications of AI, promoting a culture of responsibility and vigilance (Wang et al., 2020).

c. Reporting Mechanisms for AI-related Incidents or Concerns:

• A dedicated channel should be established for employees to report any malfunctions, anomalies, or concerns related to AI systems [See Appendix 6].

• All reported incidents must be logged and investigated promptly, and feedback must be provided to the parties concerned.

• Periodic analysis of these reports can offer insights into recurring issues, facilitating proactive measures to enhance AI safety.
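A reporting channel of this kind needs, at minimum, a log that records each report, tracks its investigation status, stores the feedback given, and supports analysis of recurring issues. The sketch below is a hypothetical in-memory version in Python; a real channel would need persistent storage and access controls, and the class names, categories, and sample reports are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple


@dataclass
class Incident:
    reported_on: date
    system: str
    category: str          # e.g. "malfunction", "anomaly", "concern"
    description: str
    status: str = "logged"
    feedback: Optional[str] = None


class IncidentLog:
    """Hypothetical in-memory log; a real channel needs persistent, access-controlled storage."""

    def __init__(self) -> None:
        self.incidents: List[Incident] = []

    def report(self, incident: Incident) -> int:
        """Log a new report and return its position as a simple ticket number."""
        self.incidents.append(incident)
        return len(self.incidents) - 1

    def close(self, ticket: int, feedback: str) -> None:
        """Record the end of an investigation and the feedback given to the reporter."""
        self.incidents[ticket].status = "closed"
        self.incidents[ticket].feedback = feedback

    def open_tickets(self) -> List[int]:
        """Tickets still awaiting investigation."""
        return [i for i, inc in enumerate(self.incidents) if inc.status != "closed"]

    def recurring(self, min_count: int = 2) -> Dict[Tuple[str, str], int]:
        """Count (system, category) pairs reported at least min_count times."""
        counts = Counter((inc.system, inc.category) for inc in self.incidents)
        return {key: n for key, n in counts.items() if n >= min_count}


# Illustrative reports (hypothetical data).
log = IncidentLog()
first = log.report(Incident(date(2024, 1, 10), "chatbot", "anomaly", "Inconsistent answers"))
log.report(Incident(date(2024, 2, 3), "chatbot", "anomaly", "Off-topic reply"))
log.report(Incident(date(2024, 2, 5), "grading-tool", "malfunction", "Request timed out"))
log.close(first, "Configuration corrected; reporter notified")
print(log.open_tickets())  # [1, 2]
print(log.recurring())     # {('chatbot', 'anomaly'): 2}
```

Grouping by system and category is one simple way to surface recurring issues; other groupings (by department or time period) would work the same way.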

d. Periodic Review of AI Tools for Potential Risks:

• Every quarter, a review committee should evaluate the performance, safety, and reliability of AI tools in operation.

• External experts may be consulted to provide an unbiased assessment and recommend improvements.

• Based on the findings, necessary modifications or updates should be made to the AI tools and systems to ensure their continued safety and efficacy.

By adhering to these procedures, we aim to cultivate a workplace environment where AI tools and systems are utilized responsibly, ensuring the safety and well-being of all stakeholders.

Effective collaboration with AI vendors is crucial for the seamless and safe integration of AI tools into the university's infrastructure. As such, the following procedures are established:

a. Criteria for Selecting AI Vendors Based on Safety and Ethical Standards:

• Vendors should demonstrate a consistent track record of prioritizing safety in their AI systems, with minimal reported malfunctions or security breaches.

• They must comply with recognized international standards for AI safety and ethics.

• Vendor offerings should include comprehensive documentation on the safety features, operational requirements, and potential risks of their AI tools.

• The vendor's commitment to ongoing research and development to address emerging AI risks is vital.

b. Regular Audits of AI-driven Tools and Systems:

• An external auditing body should assess the performance, safety, and reliability of the AI tools provided by the vendor every six months (Falco et al., 2021).

• Audit results should be shared with the vendor for necessary rectifications or updates.

• Any discrepancies in the promised versus actual performance of the AI tools should be addressed immediately.
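Checking promised against actual performance amounts to comparing each metric in the vendor's documentation with the value the audit measured. The sketch assumes every metric is "higher is better" and uses a hypothetical `audit_discrepancies` function with illustrative figures; in practice the metrics, their direction, and any tolerance would need to be agreed with the vendor.

```python
from typing import Dict, Optional, Tuple


def audit_discrepancies(
    promised: Dict[str, float],
    measured: Dict[str, float],
    tolerance: float = 0.0,
) -> Dict[str, Tuple[float, Optional[float]]]:
    """Return metrics whose measured value falls short of the vendor's promised value.

    Assumes higher is better for every metric. A metric with no measurement is
    reported too, because the promise could not be verified.
    """
    shortfalls = {}
    for metric, target in promised.items():
        actual = measured.get(metric)
        if actual is None or actual < target - tolerance:
            shortfalls[metric] = (target, actual)
    return shortfalls


# Illustrative figures (hypothetical vendor claims and audit results).
promised = {"accuracy": 0.95, "uptime": 0.999, "recall": 0.90}
measured = {"accuracy": 0.91, "uptime": 0.9995}
print(audit_discrepancies(promised, measured))
# {'accuracy': (0.95, 0.91), 'recall': (0.9, None)}
```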

c. Feedback Mechanism between the University and AI Vendors:

• A structured communication channel should be established for regular feedback exchange.

• The university should promptly inform vendors of any performance issues, risks identified, or areas of improvement.

• Vendors are expected to act on this feedback, ensuring continuous enhancement of their AI solutions in alignment with the university's needs and safety standards.

Through these collaborative procedures, the university aims to maintain a synergistic relationship with AI vendors, ensuring the highest standards of safety and efficiency in AI tool deployment.

As AI tools become integral to our operational landscape, maintaining ethical standards is paramount. The following procedures aim to uphold these principles:

a. Ensuring Non-discrimination and Fairness in AI-driven Decisions:

• AI systems should be designed and trained to avoid biases (Roselli et al., 2019). Regular reviews must be conducted to detect and rectify any inadvertent discriminatory patterns.

• AI outputs should be consistently monitored to ensure fairness across all user groups, promoting an equitable environment.
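One common way to monitor outputs for fairness across user groups is to compare selection (positive-outcome) rates between groups. The sketch below computes per-group rates and flags any group whose rate falls below a chosen fraction of the highest group's rate. The 0.8 default mirrors the "four-fifths rule" from US employment-selection guidance and is an assumption here, not a threshold set by this policy; the group labels and records are hypothetical. This is only one fairness measure, and on its own it neither establishes nor rules out discrimination.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def flag_disparities(rates: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Groups whose rate is below `threshold` times the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        return []  # No group received a positive outcome, so there is nothing to compare.
    return sorted(group for group, rate in rates.items() if rate / highest < threshold)


# Illustrative outcomes for two hypothetical user groups.
records = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = selection_rates(records)
print(rates)                    # {'A': 0.8, 'B': 0.5}
print(flag_disparities(rates))  # ['B']
```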

b. Transparency in AI Decision-making Processes:

• The rationale behind AI-driven decisions should be accessible and understandable to users. This can be achieved by employing explainable AI techniques.

• Stakeholders should be informed about how AI tools function and the basis on which decisions are made.
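For models whose score is a weighted sum of inputs, an explanation can be produced directly by reporting each feature's contribution (weight times value). The sketch below assumes such a linear scoring model; the function name, feature names, and weights are hypothetical. For non-linear models, dedicated explainable AI techniques such as SHAP or LIME are commonly used instead.

```python
from typing import Dict, List, Tuple


def explain_linear_score(
    weights: Dict[str, float],
    features: Dict[str, float],
    bias: float = 0.0,
) -> Tuple[float, List[Tuple[str, float]]]:
    """Split a linear model's score into per-feature contributions.

    Every feature must have a weight; a missing weight raises KeyError.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest influence first, whether it pushed the score up or down.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical model that prioritizes IT support tickets.
weights = {"hours_open": 0.1, "users_affected": 0.5, "has_workaround": -2.0}
ticket = {"hours_open": 10, "users_affected": 6, "has_workaround": 1}
score, ranked = explain_linear_score(weights, ticket)
print(score)   # 2.0
print(ranked)  # [('users_affected', 3.0), ('has_workaround', -2.0), ('hours_open', 1.0)]
```

Presenting the ranked contributions alongside the decision gives users a plain-language basis for it, for example "the number of affected users raised the priority most".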

c. Ensuring Privacy and Data Protection:

• AI systems must adhere to data protection regulations, ensuring user data confidentiality (Janssen et al., 2020).

• Only essential data should be collected, and rigorous encryption methods should be employed to safeguard this information.

• Users should be informed about data usage and given control over their personal information (Van Ooijen and Vrabec, 2019).
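The data-minimization requirement can be enforced in code by passing only allow-listed fields to an AI tool, and direct identifiers can be replaced with keyed pseudonyms. The sketch below uses only Python's standard library; the field names, allow-list, and key are hypothetical. Keyed hashing (HMAC-SHA256) is pseudonymization, not encryption: it does not allow the original identifier to be recovered, so encrypting stored data would still require a vetted cryptography library and proper key management.

```python
import hashlib
import hmac

# Hypothetical allow-list of the fields an AI tool genuinely needs.
ESSENTIAL_FIELDS = frozenset({"student_id", "course_code", "submission_text"})


def minimize(record: dict, essential: frozenset = ESSENTIAL_FIELDS) -> dict:
    """Keep only allow-listed fields before a record is passed to an AI tool."""
    return {key: value for key, value in record.items() if key in essential}


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest (hex string)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustrative record (hypothetical data).
record = {
    "student_id": "S123",
    "name": "Jane Doe",
    "home_address": "1 Example Street",
    "course_code": "ABC101",
    "submission_text": "Essay text",
}
safe = minimize(record)
# In practice the key would come from a managed secret store, not source code.
safe["student_id"] = pseudonymize(safe["student_id"], secret_key=b"example-key-only")
print(sorted(safe))  # ['course_code', 'student_id', 'submission_text']
```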

Through these procedures, the institution can ensure the ethical deployment and use of AI, reinforcing trust in its systems and their integrity.

Alkatheiri, M.S., 2022. Artificial intelligence assisted improved human-computer interactions for computer systems. Computers and Electrical Engineering, 101, p.107950.

Almada, M., 2019, June. Human intervention in automated decision-making: Toward the construction of contestable systems. In Proceedings of the Seventeenth International Conference on Artificial Intelligence and Law (pp. 2-11).

Benbya, H., Davenport, T.H. and Pachidi, S., 2020. Artificial intelligence in organizations: Current state and future opportunities. MIS Quarterly Executive, 19(4).

Critch, A. and Krueger, D., 2020. AI research considerations for human existential safety (ARCHES). arXiv preprint arXiv:2006.04948.

Dwivedi, Y.K., Hughes, L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., Duan, Y., Dwivedi, R., Edwards, J., Eirug, A. and Galanos, V., 2021. Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management, 57, p.101994.

Esposito, D. and Esposito, F., 2020. Introducing machine learning. Microsoft Press.

Falco, G., Shneiderman, B., Badger, J., Carrier, R., Dahbura, A., Danks, D., Eling, M., Goodloe, A., Gupta, J., Hart, C. and Jirotka, M., 2021. Governing AI safety through independent audits. Nature Machine Intelligence, 3(7), pp.566-571.

Feijóo, C., Kwon, Y., Bauer, J.M., Bohlin, E., Howell, B., Jain, R., Potgieter, P., Vu, K., Whalley, J. and Xia, J., 2020. Harnessing artificial intelligence (AI) to increase wellbeing for all: The case for a new technology diplomacy. Telecommunications Policy, 44(6), p.101988.

Janssen, M., Brous, P., Estevez, E., Barbosa, L.S. and Janowski, T., 2020. Data governance: Organizing data for trustworthy Artificial Intelligence. Government Information Quarterly, 37(3), p.101493.

Kazim, E. and Koshiyama, A.S., 2021. A high-level overview of AI ethics. Patterns, 2(9).

Larsson, S., 2020. On the governance of artificial intelligence through ethics guidelines. Asian Journal of Law and Society, 7(3), pp.437-451.

Leslie, D., 2019. Understanding artificial intelligence ethics and safety. arXiv preprint arXiv:1906.05684.

Liu, Z., Xie, K., Li, L. and Chen, Y., 2020. A paradigm of safety management in Industry 4.0. Systems Research and Behavioral Science, 37(4), pp.632-645.

Loza de Siles, E., 2021. Artificial Intelligence Bias and Discrimination: Will We Pull the Arc of the Moral Universe Towards Justice? J. Int'l & Comp. L., 8, p.513.

Mantelero, A. and Esposito, M.S., 2021. An evidence-based methodology for human rights impact assessment (HRIA) in the development of AI data-intensive systems. Computer Law & Security Review, 41, p.105561.

Martinetti, A., Chemweno, P.K., Nizamis, K. and Fosch-Villaronga, E., 2021. Redefining safety in light of human-robot interaction: A critical review of current standards and regulations. Frontiers in Chemical Engineering, 3, p.32.

Min, J., Kim, Y., Lee, S., Jang, T.W., Kim, I. and Song, J., 2019. The fourth industrial revolution and its impact on occupational health and safety, worker's compensation and labor conditions. Safety and Health at Work, 10(4), pp.400-408.

Muley, A.A., Shettigar, R., Katekhaye, D., BS, H. and Kumar, P.A., 2023. Technological advancements and the evolution of HR analytics: A review. Boletin de Literatura Oral, 10(1), pp.429-439.

O'Brien, J.T. and Nelson, C., 2020. Assessing the risks posed by the convergence of artificial intelligence and biotechnology. Health Security, 18(3), pp.219-227.

Parker, S.K. and Grote, G., 2022. Automation, algorithms, and beyond: Why work design matters more than ever in a digital world. Applied Psychology, 71(4), pp.1171-1204.

Roselli, D., Matthews, J. and Talagala, N., 2019, May. Managing bias in AI. In Companion Proceedings of The 2019 World Wide Web Conference (pp. 539-544).

Said, A., 2023. Mitigating Risks with Data Integrity as Code (DIaC) in Banking AI/ML. International Journal of Computer Science and Technology, 7(1), pp.370-397.

Shneiderman, B., 2020. Bridging the gap between ethics and practice: guidelines for reliable, safe, and trustworthy human-centered AI systems. ACM Transactions on Interactive Intelligent Systems (TiiS), 10(4), pp.1-31.

Tamers, S.L., Streit, J., Pana‐Cryan, R., Ray, T., Syron, L., Flynn, M.A., Castillo, D., Roth, G., Geraci, C., Guerin, R. and Schulte, P., 2020. Envisioning the future of work to safeguard the safety, health, and well‐being of the workforce: A perspective from the CDC's National Institute for Occupational Safety and Health. American Journal of Industrial Medicine, 63(12), pp.1065-1084.

Tumai, W.J., 2021. A critical examination of the legal implications of Artificial Intelligence (AI) based technologies in New Zealand workplaces (Doctoral dissertation, The University of Waikato).

Van Ooijen, I. and Vrabec, H.U., 2019. Does the GDPR enhance consumers’ control over personal data? An analysis from a behavioural perspective. Journal of Consumer Policy, 42, pp.91-107.

Wang, Y., Xiong, M. and Olya, H., 2020, January. Toward an understanding of responsible artificial intelligence practices. In Proceedings of the 53rd Hawaii International Conference on System Sciences (pp. 4962-4971). Hawaii International Conference on System Sciences (HICSS).

